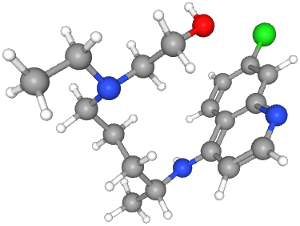

About that Hydroxychloroquine for COVID-19 trial

Over the past few days there has been some hype about an old malaria drug, Chloroquine, and the closely related Hydroxychloroquine, and whether they might help against Covid-19. Donald Trump in particular indicated that he thinks the drug is promising, and Elon Musk tweeted about it.

Hydroxychloroquine showed promising results in an in vitro study, but that is obviously no evidence that it will do any good in humans. While more trials are running, the only evidence in humans as of today is a study from a hospital in Marseille, France, and that study is riddled with problems. The trial is still ongoing, but preliminary results have been published as a preprint on Medrxiv, on the hospital’s webpage and in the International Journal of Antimicrobial Agents.

While I understand the basics of a clinical trial, I’d like to point out that I am not a medical scientist. Most of the issues here were spotted by other people, and I have linked to the sources.

Not randomized

The most obvious issue with this study is that it is not a randomized trial. The study has a control group to which the treatment is compared, but the patients were not randomly assigned. The control group consists of patients from a different hospital and patients who refused the treatment or had other exclusion criteria.

This alone is extremely problematic, because such a selection can introduce all kinds of differences between the two groups that affect the outcome. For example, there’s an age difference of more than 10 years between the two groups.

It is therefore possible that the treatment group got better due to other factors.
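To make that concrete, here is a minimal sketch with entirely invented numbers, none of them from the study. It assumes a drug that does nothing at all, and two kinds of patients: "low risk" ones who clear the virus 80% of the time on their own and "high risk" ones who do so 40% of the time. The only difference between the two groups is how many of each kind they contain.

```python
# All numbers here are hypothetical and chosen only for illustration.
# The drug is assumed to have NO effect: clearance depends only on risk level.

def clearance_rate(n_low_risk, n_high_risk, p_low=0.8, p_high=0.4):
    """Expected share of a group that clears the virus without any drug effect."""
    cleared = n_low_risk * p_low + n_high_risk * p_high
    return cleared / (n_low_risk + n_high_risk)

# Without randomization, the groups can end up with a different patient mix.
treated = clearance_rate(n_low_risk=15, n_high_risk=5)
control = clearance_rate(n_low_risk=5, n_high_risk=15)

print(f"treated: {treated:.0%}, control: {control:.0%}")
```

With these made-up inputs the "treated" group looks 20 percentage points better, even though the drug in this toy example does nothing. Random assignment is what makes the patient mix comparable on average, so that such a gap cannot arise from selection alone.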

Surrogate Endpoint

The main outcome the study is measuring is the viral load in patients. This is an example of what is called a surrogate endpoint in clinical studies.

Surrogate endpoints are measurements that are believed to indicate a patient’s disease and how bad it is. However, they are not what patients really care about. In the case of Covid-19, the most obvious parameter to care about is whether patients survive.

The reason a surrogate endpoint was used here is relatively obvious: it allows faster results. The downside is that the results may be less relevant. It is entirely possible that a patient with a higher viral load but mild symptoms is better off than a patient with a lower viral load who needs to be transferred to an intensive care unit.

In a podcast, German virologist Christian Drosten discussed this study and raised the suspicion that, given the significant differences between the treatment and control groups and the measured viral loads, the patients in the treatment group may have been in a later stage of the disease, which would explain the perceived effect. (The podcast is in German, here's the text translated via Google translate.)

But whether that suspicion is true or not: The lack of randomization makes it basically impossible to know whether the measured results were due to the drug or due to patient selection.

Exclusion of Patients

In a comment on PubPeer, Elisabeth Bik raises another severe issue. Of the 26 patients in the group that got the medication, six were excluded because they stopped the treatment. Of these six patients, three were transferred to an intensive care unit and one died. In other words: The excluded patients didn’t do particularly well.

If patients are excluded from a study, this can obviously cause a bias. If a study excludes patients who do particularly badly on the drug, the medication will look better than it actually is.

This effect is well known, and the usual way to counter it is to do an intention-to-treat analysis. What sounds very technical simply means that you collect the results of all patients that you assigned to the medication at the beginning – no matter whether they continue the treatment or not. The results of this study contain no intention-to-treat analysis.
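Here is a rough sketch of how much the choice of analysis can matter. The group size (26) and the number of excluded patients (6) are taken from the numbers above. Everything else is an assumption made up for illustration: that 14 of the remaining 20 patients had a good outcome, and that none of the six excluded patients count as a good outcome.

```python
from dataclasses import dataclass

@dataclass
class Patient:
    completed: bool  # stayed on the treatment until the end
    success: bool    # hypothetical "good outcome" flag, not study data

# 26 patients assigned to the drug, 6 of whom stopped the treatment.
# The 14/6 split among the 20 completers is an invented assumption.
patients = (
    [Patient(completed=True, success=True)] * 14
    + [Patient(completed=True, success=False)] * 6
    + [Patient(completed=False, success=False)] * 6
)

def success_rate(group):
    """Share of patients in the group with a good outcome."""
    return sum(p.success for p in group) / len(group)

# Analysis that drops everyone who stopped the treatment.
completers_only = success_rate([p for p in patients if p.completed])

# Intention to treat: everyone assigned to the drug is counted.
intention_to_treat = success_rate(patients)

print(f"completers only:    {completers_only:.0%}")
print(f"intention to treat: {intention_to_treat:.0%}")
```

Under these assumptions the drug looks clearly better when the dropouts are left out than when everyone who was assigned to it is counted. The real gap depends on the actual outcomes, which this sketch does not know.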

Inconsistency between Trials Registry and reported Result

The main result published by the authors is the viral load of the patients on day 6 of the treatment. The study was preregistered in the EU Clinical Trials Register.

The preregistration says that the viral load endpoint will be evaluated on Day 1, Day 4, Day 7 and Day 14 of the trial. However, the main result published is the viral load on Day 6, a day that is not among the preregistered ones. This is a form of so-called outcome switching.

On the Medrxiv page the authors write that they have followed the reporting guidelines of the EQUATOR project. EQUATOR is an initiative to improve trial reporting, and it provides checklists for scientists so they follow proper reporting procedures. Yet while the authors claim they have "uploaded the relevant EQUATOR Network research reporting checklist", that is not true. The checklist is not available on Medrxiv.

On Twitter Gaetan Burgio points out another problem: For most of the patients in the control group the viral load was not measured. In the table of the results for many patients only a “positive” viral load is reported, but no exact number. This means the main endpoint of the study was not measured in the majority of the control group.

Another commenter on PubPeer, David Norris, pointed out that some patients in the table were reported as negative, but had a measurable viral load afterwards. This would indicate that the tests were reporting false negatives – patients who looked healthy in a test, but continued to be affected by the virus later.

The paper was investigating Hydroxychloroquine, but it also makes a claim about the chemically similar Chloroquine: “[…] an early clinical trial conducted in COVID-19 Chinese patients, showed that chloroquine had a significant effect, both in terms of clinical outcome and viral clearance, when comparing to controls groups”.

If you follow the cited reference, however, there is no such trial to be found. The linked publication is a very short PDF making claims about clinical trials being performed in China, and it contains a list of references to the Chinese clinical trials registry. If you try to follow up on them you won’t find any results, just trials registry entries about trials that are, if you believe the trials registry, still recruiting patients.

This is an incomplete list of issues raised about this study. Further discussion can be found in the comment sections of Medrxiv and PubPeer.

There is currently a lot of media hype about Chloroquine and Hydroxychloroquine. Given the current pandemic it is understandable that many people are desperately looking for positive news and potential treatment options. But the treatment options should actually help patients, and only proper scientific studies – and that usually means randomized controlled trials – can show that. Right now we don’t know if Chloroquine or Hydroxychloroquine have any benefit. I don't see how poorly performed studies that tell us nothing are of any help.

There are other ongoing studies for these drugs, and hopefully some of them will be performed with proper methodology, so we will know better if they have any benefit for Covid-19 patients. It’s good to remind oneself, though, that there are far more medications that show promising results in pre-clinical studies than there are medications that actually work. It is a perhaps frustrating, yet rational, default assumption that most potential drugs don’t work.

Image source: PubChem Identifier: CID 3652
